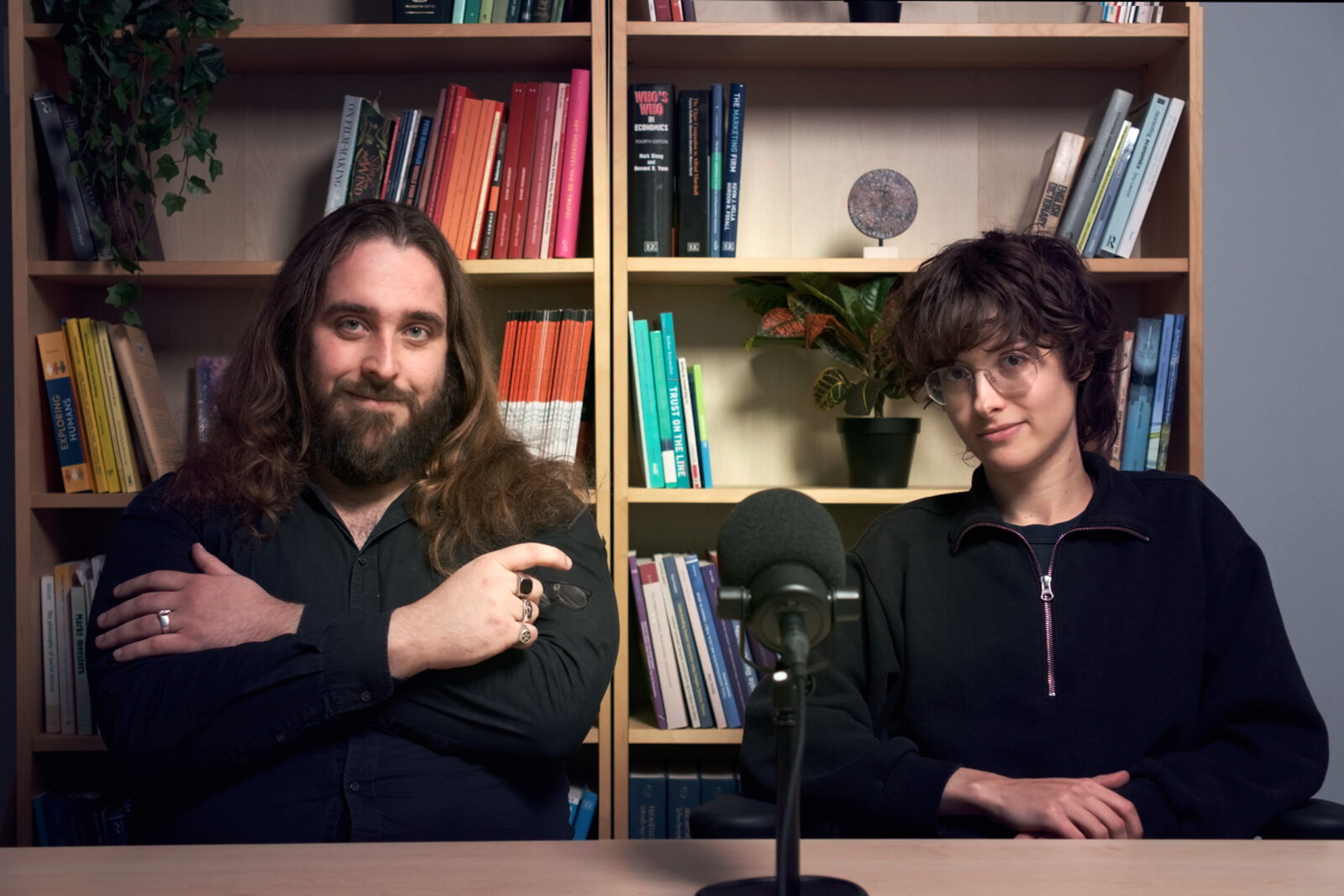

‘Education sector should demand compensation from AI companies’

Higher education should file claims for compensation from the makers of ChatGPT and similar AI programs, says expert Marleen Stikker. “All the work that we carry out in the education and research sectors is affected. The costs are huge.”

Image by: Arie Kers

Artificial intelligence will change higher education and scientific research substantially, but how? And should we just let it all happen? “We should decide for ourselves what we do and don’t find acceptable, rather than just moving with the market.”

Who is Marleen Stikker?

Internet pioneer Marleen Stikker (aged 61) spoke about the future of education at the opening of the academic year at Erasmus University Rotterdam.

She is director of Waag Futurelab in Amsterdam, a design and research centre that focuses on technology and society. She was one of the founders of De Digitale Stad (the digital city), an online community and social media platform. She is also a council member of the Advisory Council for Science, Technology and Innovation (AWTI).

Her book Het internet is stuk – maar we kunnen het repareren (The internet is broken – but we can fix it) was published in 2019. It is about the influence of large companies on the internet. She is Professor of Practice at Amsterdam University of Applied Sciences and holds an honorary doctorate from VU Amsterdam.

Expert Marleen Stikker chooses her words carefully and certainly does not want to give the impression that she is ‘against’ artificial intelligence (AI), but she does come to a radical conclusion: higher education should file a claim for damages against big tech companies.

Mystification

According to Stikker, there are two movements in the criticism of AI. “One movement talks of existential risks and claims that AI will subjugate humans in the future. This movement mostly comes from the tech industry itself. Sam Altman of OpenAI, for instance, travels all over Europe proclaiming that humanity is at stake.”

Stikker opposes such ideas simply because she finds them unscientific: “They believe AI surpasses human intelligence and pretend it is a power in its own right. Human intelligence is many times more complex and ingenious than computing.” Moreover, Stikker feels that it distracts attention from what is actually going on. “People like Altman are publicly calling for regulations, while his company is lobbying against AI legislation in Europe.”

She believes that this first ‘critical’ movement is mostly a form of advertising. “The AI industry is furthering the mystification and instilling fear. Society no longer knows how to act and is seeking help from the same prophets of doom who threw AI over us.”

Major issues

What about the second movement? “It looks at the major issues and problems of AI that emerge in working practice. We must deal with these now, or AI will undermine our democracy and self-determination. There is no time to waste. We must not allow ourselves to be frightened, but we should ask questions. For instance: whose data is AI trained on? This is an issue of power. Scientific and cultural productions have been purloined on improper grounds. There are only a few parties with enough capital to do this, and these are private parties with specific interests.”

Stikker warns that this new form of AI (generative AI) is already being built into social media, work processes and educational programmes. “Many people act as if it’s all inevitable: we can’t afford to ignore AI as that would be unfair to students. But is that really the case? Have we investigated this? Why are we allowing this technology to be thrown into the world without procedures and monitored trials?”

‘They have appropriated data that doesn’t belong to them’

The problem is not that technology catalyses major changes, explains Stikker. “We know that, of course, and that will always be the case. Nor is it a problem that AI presents us with all kinds of fundamental questions, as they are interesting. What is knowledge, what is correlation, what bias is there in data? If you ask ChatGPT a question on a subject that you know a great deal about, then you’ll see what nonsense is sometimes generated.”

According to Stikker, the problem is that the power lies with parties that have until now shown that they cannot handle that power well. “They have appropriated data that doesn’t belong to them. This is also where all those lawsuits come from. Everyone is going to court because those companies have collected all kinds of data from websites and are not bothered about intellectual property or the public domain. They are privatising data that doesn’t belong to them. They also looted Waag Futurelab’s website, for instance, despite the fact that we have a Creative Commons licence, which prohibits commercial use. Media companies such as The Guardian are now blocking companies like OpenAI that steal their content. Universities should do the same.”

Food and pharmaceuticals

People can also read all this information and scientific articles themselves, but if an AI programme reads these, the effects are of a different order. “Just think of the effects of disinformation. It’s one problem on top of another. We would never accept this, for instance, if it concerned innovations in the pharmaceutical industry. In this industry we have put certain protection mechanisms in place: you conduct an extensive trial, you carefully monitor the effects, you have an ethics committee. The industry also has supervisory bodies such as the Medicines Evaluation Board (CBG in Dutch) and the European Medicines Agency (EMA). With regard to innovations in the pharmaceutical industry we do not say: we have to go along with these immediately or we may miss the boat.”

According to Stikker, the great danger in the education sector is not that students will occasionally use ChatGPT to write a reasonable essay. This is of secondary importance. “I wish the education sector would say: whose technology is it anyway? The education sector should help determine what is and isn’t possible with ChatGPT. We currently show the wrong reflex: oh dear, how is this affecting our scientific methodology and our tests? We are far too compliant.”

Damage

In fact, Stikker believes that the scientific community and the education sector should sue the tech companies. “They have absorbed scientific knowledge on improper grounds and are putting a flawed product on the market that is damaging higher education.”

Stikker also believes that the problem was foreseeable, but that the companies paid no heed to it. “All the work that we carry out in the education and research sectors is affected. And how do you maintain the integrity of science? The costs are huge. I think it would be interesting to explore whether a claim can be made.”

No ban

Stikker emphasises that this does not mean that she is against this new AI. “I do not want to appeal for a ban on AI. But we do need to get a grip on the process by which we introduce new technology into society.”

AI does, of course, raise all sorts of interesting questions. “And that’s exactly what I like about it. We need to discuss this together in the education sector: perhaps we need to start testing in a different way? We now often get students to write essays along the lines of ‘A says this, B says that, and my conclusion is’. Perhaps we can do this differently and put more emphasis on creative writing rather than academic writing. And are we asking the right questions? Wouldn’t it be better to ask students to design something rather than write an essay? This is fairly common in technical studies, but would also be possible for social sciences and humanities.”

Data and truth

The new technology also raises all sorts of interesting questions about facts and truth. “It involves complex issues that you can discuss with students. It is very important that they understand that data and algorithms are not neutral. We often skip these questions, even though they are fundamental to understanding what you are working with. This is also found in other fields of study, such as economics, for instance: how do you measure prosperity?”

‘Why do we really think we can reduce reality to data?’

Another issue is: what do we define as intelligence? “It is called artificial intelligence, but it is actually computing, i.e. data processing. That’s actually what I find most exciting about AI: the assumptions about intelligence and awareness. Why do we think that we can reduce reality to data? Programmes always include the warning: they could be wrong. In this way they are evading their responsibility, which is something you can’t do as a human being.”

She believes that to better understand this, the various sciences should cooperate more. “Ultimately, it is no longer a question of: this is natural sciences, that’s humanities and that’s social sciences. You should see – from a range of disciplines – how issues are linked with one another. Students have to learn to question those algorithms together.”

Funding

Stikker believes that the funding of AI research also needs a fundamental overhaul. “Currently, we can only conduct large-scale research if the business community helps fund it. A trade union, for instance, does not have the resources to finance this research. That affects which questions get asked. The victims of the Dutch childcare benefits scandal cannot access data or AI systems to monitor the government.”

Stikker is a council member of the Advisory Council for Science, Technology and Innovation (AWTI). Could this advisory body not deal with this? Stikker stresses that she is speaking in a personal capacity and not on behalf of the AWTI. “But several recommendations are certainly in line with this.”

She refers to a recent report on ‘recognition and rewards’ and the quality of science. Moreover, the AWTI is working on an advisory report on innovation in the social sciences and humanities, due to be published early next year. “Also in these disciplines we cannot ignore AI.”

Language model

We need to explain to students that generative AI is a language model. “It generates text and images based on computing, but there is no real meaning. The models produce a sort of Reader’s Digest of various data sources and not a truth.”

The editorial team
